Rendered #2

Towards Accountability in the Development of Computing Technologies and Creative Media

Rendered #1 launched a few weeks before the death toll from COVID-19 in America hit 100,000. We’ve recently more than doubled that, and are showing no signs of slowing down. Like everyone else in the world, I’ve been watching this acutely American pandemic unfold from a computer screen, becoming a pseudo-expert in a domain that now dominates every moment of our waking life.

However, I’ve continued to compile articles and papers on all the latest advances and news I consider the purview of this newsletter. A week after the first issue was published, the second issue was already drafted, and as I was putting the final touches on the piece I watched the police officer Derek Chauvin kill George Floyd.

I watched the protests afterwards, starting in Minneapolis and quickly making their way to NYC. I watched protesters march outside my window, and joined them a few days later. On Twitter I watched peaceful protests I attended during the day turn vicious at night, with police attacking non-violent protesters at protests against police violence. I waited for what I thought the “right time” would be to publish my newsletter about 3D technology. But the terrible reality is that I was waiting for a time that would never come, and never existed in the first place.

The violence perpetrated by the state against people of color and disenfranchised minorities in America has a history that spans the lifetime of this country, and continues to find new ways to manifest itself with each new generation. Outright slavery turns into Jim Crow, which turns into implicit segregation, which, aided by the GI Bill, cuts anyone but white people out of the accumulation of generational wealth, which turns into police force to maintain the newly established suburbs, which turns into modern-day militarized police, which turns into surveillance capitalism, and so on.

The violence continues. With a mailing list now nearly 500 people strong, all invested in pushing the technologies of digital representation and capture forward, I wanted to use this issue to highlight the past that places us squarely in the line of culpability for helping to perpetrate and enable systemic racism and discrimination across time, in the hopes that we can learn from our past actions to pave a more radically anti-racist future for ourselves and those that follow.

As someone who reads Rendered, it’s perhaps easy to see this issue as “over there,” where “politics happen.” However, to think that the creative/technical industries are not instruments of systemic racism and sexism is to be complicit in the exact structures that create the conditions people are on the street protesting against.

If this is news to you, I hope this newsletter can be a wakeup call, and that you can come to understand how the technologies of computer vision, 3D rendering, and filmmaking are some of the biggest, most implicit perpetrators and profiteers of discrimination around.

This is also to say that, if you do not believe that Black Lives Matter, you will find no quarter here. Our industries should not be places shielded from the nature of race and politics. Further, you should recognize that every line of code you write is political, that every volumetric capture you take is political, that every render, compile, export, etc. takes place in a vast cultural network and contains within it all your own biases and opinions. To even claim that these complex cultural and technical systems are, or even can be, “without politics” is a racist/classist/sexist position.

For those on the fence, or for those who want more insight into this, I’m dedicating the rest of this newsletter to compiling some instances where our collective industries have perpetrated, enabled, and profited off of racism, even as recently as weeks ago. This is to show that these incidents are not merely one-offs, but part of a pattern the creative and technical community has been complicit in perpetrating throughout time, suggesting that a fundamental shift in how our industries operate is needed.

If you take nothing else from this newsletter, I’m calling for us to divest from military and police contracts, to divest from ICE, to divest from technologies that, even if we intend good, have the potential to be abused and maintain the abusive status of the hegemon. These are proactive stances that require an active conversation and reflection on not only what we are making, but how what we are making can (and will) be used to perpetrate racism and discrimination in America and beyond.

We need a Murphy’s Law of Systemic Discrimination: everything that can be used to reinforce systemic discrimination and racism will be used to do so.

Exploring Systemic Racism and Discrimination through the Technical & Creative Community

Below I’ve compiled a (far from complete) list of specific incidents where the technical and creative community has not only been complicit in supporting discriminatory structures in society, but has also stuck to its guns when questioned about the potential impact of its work.

An IBM “Counting Machine”

IBM’s Enablement of Nazism

In his book IBM and the Holocaust, author Edwin Black breaks down the (very open and not hidden) connection between IBM and Nazi Germany. Though IBM regards some of the claims in the book as dubious, it has not denied the fact that IBM sold tons of its counting machines to the National Socialist German Workers’ Party (NSDAP). Those machines were crucial in establishing the work of dehumanizing a populace, and set a precedent and pattern for the methods that would become core to the operation of the Nazi party itself.

IBM profited greatly from this endeavor (as did many other companies, including automobile manufacturers like Mercedes-Benz), and in many ways is in the position it is today due to the lucrative work of selling technology to autocrats.

Microsoft, Amazon, Palantir, and ICE

This practice continues today. Companies like Microsoft, Amazon (via AWS), and Palantir sell software deals to government agencies like the United States’ Immigration and Customs Enforcement agency (ICE). These software deals, worth tens to hundreds of millions of dollars, provide crucial technical infrastructure that allows the organization to continue conducting its abhorrent behavior at the USA/Mexico border, where it separates young children from their parents and keeps children in cages. Even as recently as a few months ago, when pressured to end GitHub’s contract with ICE, GitHub’s chief executive, Nat Friedman, said:

“Thank you for the question. Respectfully, we’re not going to be reconsidering this…Picking and choosing customers is not the approach that we take to these types of questions when it comes to influencing government policy”

The work of mass subjugation requires robust technological solutions and infrastructure, a need that all of the largest tech companies are gleefully ready to fill so long as the government continues to help line their pockets.

Celluloid’s Bias Towards Whiteness

The chemically engineered light response of celluloid was, from the beginning, created with white people in mind as the “reference” or “normal” scenario. This assertion of whiteness-as-default will come up a few more times in this newsletter, but is exceptional in the context of celluloid as it is literally chemically engineered racism.

Film, as a medium and technical process, was developed as a way to make white or light skin not only look better, but to be able to “appear” at all as part of the development process. When color film is unable to accurately capture darker skin tones (or darker tones next to lighter tones, as the above video points out), erasure takes place. The whiteness of the image is centered, with other races pushed to the margins or disappearing from the developed image entirely.

Digital Sensors’ Bias Towards Whiteness

Even as we move beyond celluloid and to digital cameras, the problem of cameras “seeing” other races (or multiple races in the same image) remains. We gave up celluloid color chemistry, but in its wake rose imperfect digital sensors that, without the ability to capture the full color spectrum, relied on color LUTs to take the full range of possible colors and bake it down into a more manageable gamut.

Oftentimes standard LUTs favor white skin, and the process of photographing darker skin tones as a result needs extra consideration in a way white skin doesn’t.

Ava DuVernay, director of Selma and When They See Us (among other films), has spoken about this at length. In this video she goes over the easily overlooked nuances of shooting black skin in dark rooms and the steps she and her cinematographer have taken in order to do it right.

It also seems a cinematographic reckoning is happening right now, with the Guardian declaring a few years ago that “film finally learned to light black skin”.

Facial Recognition’s Bias

As we move beyond 2D images and into the realm of 3D, one would think that our capture technology would learn from past mistakes. However, the problem seems to never go away; it simply refocuses around newer technologies.

When Apple introduced Face ID, a Chinese woman found that it was unable to distinguish between her and her colleague. Google’s facial recognition sometimes categorized Black people as gorillas. This past month, people experimenting with Twitter’s “content-aware cropping” saw how Twitter often favored displaying white people in cropped images over people of other races.

Facial Recognition in general is also a huge topic that I may cover in a future newsletter, but for others interested in some of the discourse occurring around the ethics of facial recognition, I encourage you to check out some of the pieces below:

When the Police Treat Software Like Magic

A Case for Banning Facial Recognition

It’s also worth saying that IBM and Amazon have made at least symbolic gestures towards ceasing Facial Recognition tech, but IBM is a bit of a non-player in the space, and Amazon’s tech is its whole stack, not just the cameras. The ACM itself has also called for “an immediate suspension of the current and future private and governmental use of [facial recognition] technologies in all circumstances known or reasonably foreseeable to be prejudicial to established human and legal rights.” (thanks @schock).

The “Lena” Photo

Baking in Bias with “Test” Data

Lena

You would think that after the sunsetting of the Shirley card (the reference photo of a white woman that film labs used to calibrate skin tones in 2D image making), the image making industry would have learned its lesson, opting instead for a more diverse and robust test battery for its developing technologies.

Nope.

Around the same time the Shirley card began its road to deprecation, the burgeoning field of computer vision/digital image processing was starting to find its legs. And how would this new research field test its image processing algorithms and cameras?

By choosing a photo of a naked white woman from Playboy, of course!

Not only this, but the use of “Lena” as a test image for image processing algorithms persists to this day, once again forwarding whiteness-as-default. The documentary Losing Lena goes into more detail on the history of the image, and burgeoning efforts to replace it.

Volumetric Capture

If 2D image making is biased by design, it’s hard to overstate how the 3D image amplifies the problem, both in terms of the circumstances of its creation and what it is purporting to “capture”.

In his book 3D: History, Theory and Aesthetics of the Transplane Image, author Jens Schröter makes a distinction between 2D media having the ability to control only time (they are single moments, or series of single moments played in rapid succession to show a single thing as captured), and 3D capture/representation offering the ability to exert “spatial control” in addition to the “control of time”.

Which is to say that 3D image making is totalizing in its capture of time and space. However, when this ideal meets reality, this “perfect image” inevitably falls over as we project our own biases and opinions onto the act of image creation. And yet, even with its obvious metaphorical (and sometimes literal) holes, we act as if each 3D image is not only a truth but the truth.

More tangibly, many depth capture solutions are not only impossibly bad at capturing a variety of different hairstyles, but are particularly bad at capturing the hair of African-American people (and people with similarly textured hair). The texture of this hair tends to scatter or absorb IR light rather than reflect it cleanly back at the camera’s sensor, rendering a subject’s hair not just invisible, but as if it never existed at all. The data is simply not there.

Instead of trying to fix the problem, many volumetric capture companies dodge these constraints by choosing to showcase captures of bald Caucasian males or those with short-cut hair, or Caucasian females with hair pulled back into a ponytail.

The thinking here is that, in order to show off your volumetric capture system, you need to provide demo data that represents the ideal type of subject for capture. So just as with celluloid, we’ve again arrived at the idea that “whiteness” and “white characteristics” are the ideal for capture. This implicitly pushes other races and human characteristics into a philosophically “non-ideal” zone.

I’ll also take this as a moment to say:

Stop using white women in bikinis to showcase your volumetric capture technology

This practice, as well as dodging the portrayal of BIPOC, is once again forwarding the notion of whiteness-as-default, or stereotypical “beautiful” bodies as the “ideal”. If we are making progressive technology, so should we be pursuing progressive social depictions in our technologies of representation. Instead, many companies pursue pin-up types of depictions of (white) women as their data, distributed to thousands of people in order to judge the capacity of their technology.

I don’t even need to name names here — these data sets can be publicly found at nearly every volumetric capture company’s downloads page.

Military Industrial Complex

Underpinning so many “emerging” technologies is the military industrial complex. Governments are often the entities that can fund years-long research to develop new technologies of representation and control that can then be redeployed and used for military operations (read: wars).

A simple example of this is the famous Institute for Creative Technologies (ICT) at USC. Home to such luminous researchers as Paul Debevec, Hao Li, and Skip Rizzo, the ICT was founded as a result of talks between high-ranking Army officials and Disney executives, where they explored how the Army could leverage Hollywood’s expertise in the fields of simulation technology and 3D rendering to produce better training and simulation software for the Army.

This also isn’t dead history. The ICT is, to this day, a “UARC” (a “University Affiliated Research Center”), which directly receives annual funding from the Department of Defense in order to continue its operations. As recently as 2017, the ICT received more than $10 million from the DoD (pictured below), a small amount in comparison to some other institutions, but notably the only institution on the list that self-describes as a place for creative technology development. (In 2015 it received $26 million.)

It’s perhaps unsurprising then that ICT alum Palmer Luckey has now moved directly into the Defense sector after selling Oculus to Facebook.

This extends beyond software as well. Our dearly beloved depth sensors are largely outgrowths of research done at leading Israeli universities to forward surveillance technologies for the Israeli Defense Forces. As much as we may think of them as creative tools, depth sensors are fundamentally surveillance technologies meant to serve those who wish to surveil.

Digital Blackface

Screenshot of Fortnite

Fortnite

Even though we still catch our politicians in blackface in their college photos, the idea of someone openly blackfacing in public in 2020 seems unlikely. However, as new technology gives us new ways of expression, old tropes remediate themselves in the new mediums.

Fortnite came under fire a few years back in one of the most high-profile cases of digital blackface, where the creators of the Carlton and Milly Rock dances (as well as the creator of Flossing) sued Epic Games for stealing their dances, repackaging them for Fortnite, and selling them to its players without any royalties to the creators.

Though the creator of Flossing is white, for the Carlton and Milly Rock, created by former Fresh Prince of Bel-Air star Alfonso Ribeiro and rapper 2 Milly, respectively, Epic’s stealing of their dances was seen as the next incident in a long history of black culture being pilfered and repackaged in more “palatable” versions for white audiences (see… Elvis). Players are able to pantomime blackness and black culture without taking on any of the risks of what it means to actually be part of those cultures in “real” life. See also people using reaction gifs of black people because of their perceived outsized emotional performance, forwarding a narrative of black emotion as “excessive”.

FaceApp / Face Filters

“Trying on blackness” is the real underpinning of digital blackface in that it allows people to, risk-free, dip in and out of black culture without any negative side effects. It builds up incomplete models of understanding of the culture they are lifting from, and can result in a false sense of understanding in an area that has historically been disenfranchised and ignored, then working to repeat the same cycle of ignorance.

FaceApp came under rightful fire, then, when it allowed users to select a “Black,” “Indian,” “Asian,” or “Caucasian” filter for their face to see what they look like “as another race”. As impossibly, on-the-nose bad as this is, it also has the additional terrible-ness of needing to pick out individual features from those races that FaceApp deems intrinsic to that race. Which then raises the question: what if you’re someone from that race who doesn’t have those features? Does that mean you aren’t your own race? This also comes after FaceApp made a “Hot” filter that basically lightened your skin to make you look more white.

I bring this instance up as well because face replacement technology is non-trivially difficult (for now). It requires domain experts in the field to implement, as well as teams of people designing the app itself. These aren’t silly one-off apps made by students somewhere (though that would still be an issue); these are real apps made by real funded teams.

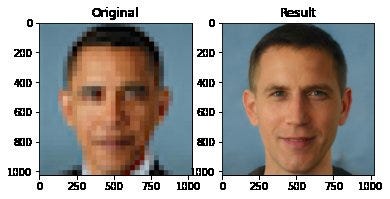

From Face Depixelizer

Conclusion

It’s no longer good enough to be “well-meaning”. Emerging technology can and will be used in ways that go beyond whatever cute scenario you’ve imagined for it, and as such we should be taking an active role in preventing these outcomes instead of accepting them as an inevitability.

I’m not talking about symbolic gestures or forming committees to form plans to form committees. I’m talking about baking these considerations into the act of software development, rendering, and creative production itself. That means having product people actively consider this when planning new features, and having developers talk about it as part of Scrum, part of sprint scoping, part of QA testing, etc. I’m talking about radically adopting new norms around the culture of production that actively disavow anything that gives off even the slightest smell of discrimination, reinforcement of the hegemon, racism, etc.

Symbolic gestures fail to lead to meaningful change and instead act as smokescreens to try and pacify dissent in hopes that causes can be forgotten. The few months since Floyd’s death have already given us examples of this, as police reform legislation introduced in California in the wake of the protests is now being curtailed. New York passed monumental legislation to repeal 50-A (a law that essentially blocked public access to police misconduct records), but police unions temporarily blocked the release of records (which were then released in part by ProPublica). De Blasio also publicly pledged to defund some part of the NYPD, but in reality simply reclassified some NYPD officers as being under the Department of Education, virtually maintaining the status quo. This comes after repeated incidents of misconduct that represent pattern behavior, not “a few bad apples”. And then, after cutting virtually no police spending, De Blasio announced that he was unable to pay back the nearly one billion dollars owed to furloughed teachers. Only after pressure from the largest teachers’ union in NYC did the city announce that it would pay back some of it.

The work needs to be tangible, and invested in an ongoing commitment to change. The work should question the norms of development, and find ways to avoid pathways that lead us back to where we started. Everest Pipkin and Ramsey Nasser approximate this by baking the consideration directly into software use through their recently written Anti-Capitalist software license, meant to “consider the organization licensing the software, how they operate in the world, and how the people involved relate to one another” and “empower individuals, collectives, worker-owned cooperatives, and nonprofits, while denying usage to those that exploit labor for profit.”

Groups like The Algorithmic Justice League are committed to long-term work to “raise public awareness about the impacts of AI, equip advocates with empirical research to bolster campaigns, build the voice and choice of most impacted communities, and galvanize researchers, policymakers, and industry practitioners to mitigate AI bias and harms.”

The status quo that has brought us here doesn’t have to continue to be the world that we live in. As the people making the technology, films, and games that the rest of the world sees and interacts with, we should hold ourselves to higher standards and find new models for development that center the concerns and needs of the disenfranchised and systemically discriminated and set a new tone for the dawning age of AI, XR, 3D Capture, ML, etc. The work is just beginning.